Modern applications owe their smooth deployments and management to Kubernetes, a game-changer in container orchestration. Originally devised by Google and now overseen by the Cloud Native Computing Foundation, it's become the go-to choice for streamlining container environments. Dive into this guide to grasp the fundamentals of Kubernetes and set up your own local development playground to unlock its potential.

Kubernetes, also known as K8s, is a robust container orchestration technology that has transformed the way modern applications are deployed and managed. It was created by Google and is now managed by the Cloud Native Computing Foundation (CNCF). It has become the de facto standard for container orchestration. In this article, we'll walk you through the basics of Kubernetes and show you how to set up your local development environment so you can start leveraging its features.

Understanding Kubernetes

Kubernetes is all about managing containers, which package applications and their dependencies into lightweight units. It automates container deployment, scaling, and management, making it easier to build, ship, and scale applications quickly.

At the core of Kubernetes are several fundamental concepts:

Pods: These are the smallest deployable units in Kubernetes. They can contain one or more containers, tightly coupled and sharing network and storage.

Services: Services enable network access to a set of Pods. They load balance traffic across multiple Pods, making it easier to manage and scale applications.

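Replication Controllers: These ensure that a specified number of replica Pods are running at all times. If a Pod fails, the Replication Controller replaces it. In current Kubernetes versions, this role is usually filled by ReplicaSets, which are managed for you by Deployments.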

Nodes: Nodes are the worker machines in a Kubernetes cluster where containers run. They form the infrastructure that powers your applications.

Setting Up a Local Kubernetes Environment

To begin your journey with Kubernetes, you need a local development environment for experimentation. Fortunately, some tools allow you to set up a local Kubernetes cluster effortlessly. Two popular options are Minikube and Docker Desktop with Kubernetes:

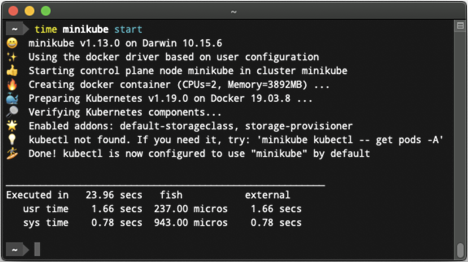

Minikube: Minikube is a tool that allows you to run a single-node Kubernetes cluster on your local machine. It’s an excellent choice for those just getting started with Kubernetes.

Docker Desktop with Kubernetes: If you’re already familiar with Docker, you can enable Kubernetes as an option in Docker Desktop, providing an easy way to run a Kubernetes cluster locally.

Let’s walk through setting up a local Kubernetes environment with Minikube. To install the latest Minikube stable release on x86-64 Windows using .exe download:

Download and run the installer for the latest release.

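Or, if using PowerShell, create a folder for the minikube.exe binary and download the latest release into it. The command below creates the folder:

New-Item -Path 'c:\' -Name 'minikube' -ItemType Directory -Force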

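Add the folder containing minikube.exe to your PATH. Run PowerShell as Administrator, since this changes the machine-wide PATH.

$oldPath = [Environment]::GetEnvironmentVariable('Path', [EnvironmentVariableTarget]::Machine)
if ($oldPath.Split(';') -inotcontains 'C:\minikube') { [Environment]::SetEnvironmentVariable('Path', "$oldPath;C:\minikube", [EnvironmentVariableTarget]::Machine) }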

If you used a terminal (like PowerShell) for the installation, close the terminal and reopen it before running Minikube.
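The installation steps stop short of starting the cluster, so here is a minimal sketch of that step. It assumes Minikube's default driver works on your machine and that kubectl is installed. `start_local_cluster` is a hypothetical wrapper name, used only so that nothing runs until you call it. The function syntax assumes a POSIX shell such as Git Bash or WSL; in PowerShell, the three commands can be run one at a time.

```shell
# Start a single-node Minikube cluster and confirm it is reachable.
# start_local_cluster is an illustrative name, not part of Minikube.
start_local_cluster() {
  # Create and boot the local cluster
  minikube start
  # Show the state of the host, kubelet, and API server
  minikube status
  # List cluster nodes; the single node should eventually report Ready
  kubectl get nodes
}
```

Call `start_local_cluster` once the binary is on your PATH. If kubectl is not installed separately, `minikube kubectl -- get nodes` should serve the same purpose.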

Deploying Your First Application

With your local Kubernetes cluster up and running, it’s time to deploy your first application. In Kubernetes, applications are defined in YAML manifests that specify the desired state of the application.

Here’s a simple example of a YAML manifest for a “Hello, Kubernetes” web application:

apiVersion: apps/v1

This manifest defines a Deployment with three replicas, which ensures that three instances of the “Hello, Kubernetes” web application are running. It uses an NGINX image to serve web content. You can deploy this application using the kubectl command-line tool, which is your primary interface for interacting with Kubernetes clusters.
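A manifest matching that description might look like the sketch below, which writes it to hello-k8s.yaml from the shell. The Deployment name hello-k8s follows the file name used in this guide. The `app: hello-k8s` label, the container name, and the `nginx:1.25` tag are assumptions chosen for illustration.

```shell
# Write a Deployment manifest with three NGINX replicas to hello-k8s.yaml.
# Label, container name, and image tag are illustrative assumptions.
cat > hello-k8s.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-k8s
spec:
  replicas: 3                 # desired number of Pods
  selector:
    matchLabels:
      app: hello-k8s          # must match the Pod template labels below
  template:
    metadata:
      labels:
        app: hello-k8s
    spec:
      containers:
        - name: hello-k8s
          image: nginx:1.25   # assumed tag; another NGINX tag would also work
          ports:
            - containerPort: 80
EOF
```

The selector and the template labels have to agree, because that is how the Deployment recognizes which Pods belong to it.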

Here’s the command to deploy the application from the manifest:

kubectl apply -f hello-k8s.yaml

After running this command, Kubernetes will create the necessary resources to ensure that three NGINX instances are running.
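To confirm that the resources were created, a few read-only kubectl commands are usually enough. The sketch below assumes the Deployment is named hello-k8s and that its Pods carry the label `app=hello-k8s`, which this guide does not state explicitly. `check_hello` is a hypothetical wrapper name.

```shell
# Inspect the Deployment after applying the manifest.
# check_hello is an illustrative name; the label is an assumption.
check_hello() {
  # Block until the rollout has finished (or report why it has not)
  kubectl rollout status deployment/hello-k8s
  # Show desired versus ready replicas
  kubectl get deployment hello-k8s
  # List the individual Pods behind the Deployment
  kubectl get pods -l app=hello-k8s
}
```

Run `check_hello` after `kubectl apply`; if the label differs in your manifest, adjust the `-l` selector accordingly.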

Scaling and Load Balancing

One of Kubernetes' most appealing advantages is its ability to easily scale applications. Assume your "Hello, Kubernetes" web application is receiving more traffic than intended and needs to be scaled up to accommodate the load. Kubernetes makes this process simple.

To scale your Deployment, you can use the kubectl scale command. For example, to scale the Deployment to five replicas, you would use the following command:

kubectl scale deployment hello-k8s --replicas=5

Kubernetes will automatically create two additional replicas, ensuring that there are now five instances of your application.
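Extra replicas only help if traffic is spread across them, which is the job of a Service. The sketch below writes a Service manifest that selects the Pods by label and balances requests across however many replicas exist. The `app: hello-k8s` label and the NodePort type are assumptions that suit a local cluster; the file name is hypothetical.

```shell
# Write a Service manifest that load balances across the hello-k8s Pods.
# The selector label and file name are illustrative assumptions.
cat > hello-k8s-service.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: hello-k8s
spec:
  type: NodePort            # exposes the Service on a port of the node
  selector:
    app: hello-k8s          # must match the labels on the Deployment's Pods
  ports:
    - port: 80              # port exposed by the Service
      targetPort: 80        # port the NGINX container listens on
EOF
```

After `kubectl apply -f hello-k8s-service.yaml`, running `minikube service hello-k8s --url` on Minikube should print an address you can open in a browser.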

Managing Configuration and Secrets

Kubernetes provides two built-in resources for managing application settings and sensitive data: ConfigMaps and Secrets.

ConfigMaps: Configuration details can be separated from the application code using ConfigMaps. Configuration data can be stored as key-value pairs and mounted as files or environment variables in your containers.

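Secrets: Secrets store sensitive information such as API keys, passwords, and tokens. They are similar to ConfigMaps but are intended for sensitive data. Note that Secret values are only base64-encoded by default, not encrypted, so restrict access to them and enable encryption at rest where possible.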

Here’s an example of a ConfigMap for a simple configuration:

apiVersion: v1

You can then reference these values in your application’s environment variables or configuration files.
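As a rough illustration of that wiring, the sketch below writes a ConfigMap and a Pod that loads every key from it as an environment variable. All names here (hello-config, hello-config-demo, GREETING, LOG_LEVEL) and the `busybox:1.36` image are hypothetical and not taken from the original manifest.

```shell
# Write a ConfigMap plus a Pod that consumes it through envFrom.
# Every name and value in this file is an illustrative assumption.
cat > hello-config.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: hello-config
data:
  GREETING: "Hello, Kubernetes"
  LOG_LEVEL: "info"
---
apiVersion: v1
kind: Pod
metadata:
  name: hello-config-demo
spec:
  restartPolicy: Never
  containers:
    - name: demo
      image: busybox:1.36
      # Print the two values injected from the ConfigMap, then exit
      command: ["sh", "-c", 'echo "$GREETING / $LOG_LEVEL"']
      envFrom:
        - configMapRef:
            name: hello-config   # each key becomes an environment variable
EOF
```

After applying the file, `kubectl logs hello-config-demo` should show the injected values. A Secret can be consumed the same way by using `secretRef` in place of `configMapRef`.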

Monitoring and Debugging

Monitoring and debugging are critical components of running Kubernetes applications. Kubernetes delivers a broad ecosystem of tools and procedures for assuring your applications' health and performance.

Monitoring Tools: Prometheus and Grafana are popular tools for collecting and visualizing metrics from your applications and the Kubernetes cluster.

Log Access: kubectl provides commands, such as kubectl logs and kubectl describe, for reading container logs and inspecting the state of your Pods.

Observability: It is critical to implement observability practices. This includes instrumenting your application code and infrastructure so that useful data can be collected.
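For day-to-day troubleshooting, a handful of kubectl commands covers most of the log access described above. The sketch assumes the hello-k8s Deployment from earlier and the label `app=hello-k8s`; `debug_hello` is a hypothetical wrapper name.

```shell
# Collect basic troubleshooting information for the hello-k8s Deployment.
# debug_hello is an illustrative name; the label is an assumption.
debug_hello() {
  # Which Pods exist, and what state are they in?
  kubectl get pods -l app=hello-k8s
  # Last 20 log lines from one Pod of the Deployment
  kubectl logs deployment/hello-k8s --tail=20
  # Detailed status, conditions, and recent events for the Deployment
  kubectl describe deployment hello-k8s
  # Events in the current namespace, oldest first
  kubectl get events --sort-by=.metadata.creationTimestamp
}
```

Running `debug_hello` prints everything in one pass; in practice you would often run the individual commands as needed.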

Updating and Rolling Back Deployments

Kubernetes simplifies the process of updating your application to new versions or configurations. When you change a Deployment, for example by pointing it at a new image version, Kubernetes performs a rolling update: it replaces Pods gradually so that the application stays available while the change rolls out.

Updating a deployment: You can update a deployment by editing its YAML manifest and then using kubectl apply to implement the changes.

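Readiness and Liveness Probes: To control how Kubernetes carries out the update, you can define readiness and liveness probes. A readiness probe tells Kubernetes when a new Pod is ready to receive traffic, so the rollout only proceeds once new Pods are healthy. A liveness probe tells it when a running container has become unhealthy and should be restarted.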

Rolling Back a deployment: If an upgrade causes problems, Kubernetes allows you to roll back to a previous known-good version. This can be a lifesaver in maintaining application stability.
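The three steps above can be sketched with kubectl as follows. The container name hello-k8s, the probe timings, and the file name probes-patch.yaml are assumptions; the function names are hypothetical wrappers so that nothing runs until you call them.

```shell
# Patch file adding HTTP probes to the (assumed) hello-k8s container.
cat > probes-patch.yaml <<'EOF'
spec:
  template:
    spec:
      containers:
        - name: hello-k8s
          readinessProbe:          # Pod receives traffic only once this passes
            httpGet:
              path: /
              port: 80
            periodSeconds: 5
          livenessProbe:           # container is restarted if this keeps failing
            httpGet:
              path: /
              port: 80
            initialDelaySeconds: 10
            periodSeconds: 10
EOF

# Merge the probes into the existing Deployment.
add_probes() {
  kubectl patch deployment hello-k8s --patch-file probes-patch.yaml
}

# Roll out a new image (passed as $1) and wait for the rollout to finish.
update_hello() {
  kubectl set image deployment/hello-k8s hello-k8s="$1"
  kubectl rollout status deployment/hello-k8s
}

# Show the revision history, then return to the previous revision.
rollback_hello() {
  kubectl rollout history deployment/hello-k8s
  kubectl rollout undo deployment/hello-k8s
}
```

For example, `update_hello nginx:1.27` would start a rolling update to that image, and `rollback_hello` would undo it if the new version misbehaves.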

Kubernetes Ecosystem

Kubernetes offers a robust ecosystem of complementary tools and projects. Here are a few worth looking into:

Helm: Helm is a Kubernetes package manager that streamlines application deployment and configuration management.

Kubernetes Operators: Kubernetes Operators are a pattern for extending Kubernetes to support complex stateful applications. They encode operational knowledge to automate application management.

Service Mesh: Technologies like Istio can improve network security and observability in your Kubernetes cluster.

Conclusion

We addressed the essentials of Kubernetes in this guide, from comprehending its core concepts to setting up your local development environment. We've also gone over how to deploy your first app, scale it, manage configuration and secrets, monitor it, and execute upgrades and rollbacks.

Kubernetes is a versatile platform that can help you simplify application deployment and management. Explore the broad ecosystem of tools and best practices that can help you realize Kubernetes' full potential as you continue your journey. As you learn more about Kubernetes, you'll discover that its capabilities go well beyond what we've described here. The Kubernetes community is vibrant and ever-changing, so you'll always have new resources and expertise to draw on as you work toward becoming a Kubernetes expert.
